I wanted to take Cursor for a spin to see whether its AI-assisted development approach lives up to the hype. I develop full apps so infrequently these days that I’d have no chance of building anything in Python without investing dozens of hours in getting back up to speed. New AI approaches that promise to smash that barrier have been tempting me to start developing again. The bottom line, after giving this a try: you couldn’t really go from zero knowledge to web/mobile deployment without some familiarity with things like setting up an IDE, configuring Python, managing libraries, dealing with hosting providers, DNS, deploying web apps, etc. Still, tools like Cursor can significantly reduce the learning curve and complexity of the entire process, and they can tell you how to do many of these things if you know what questions to ask.

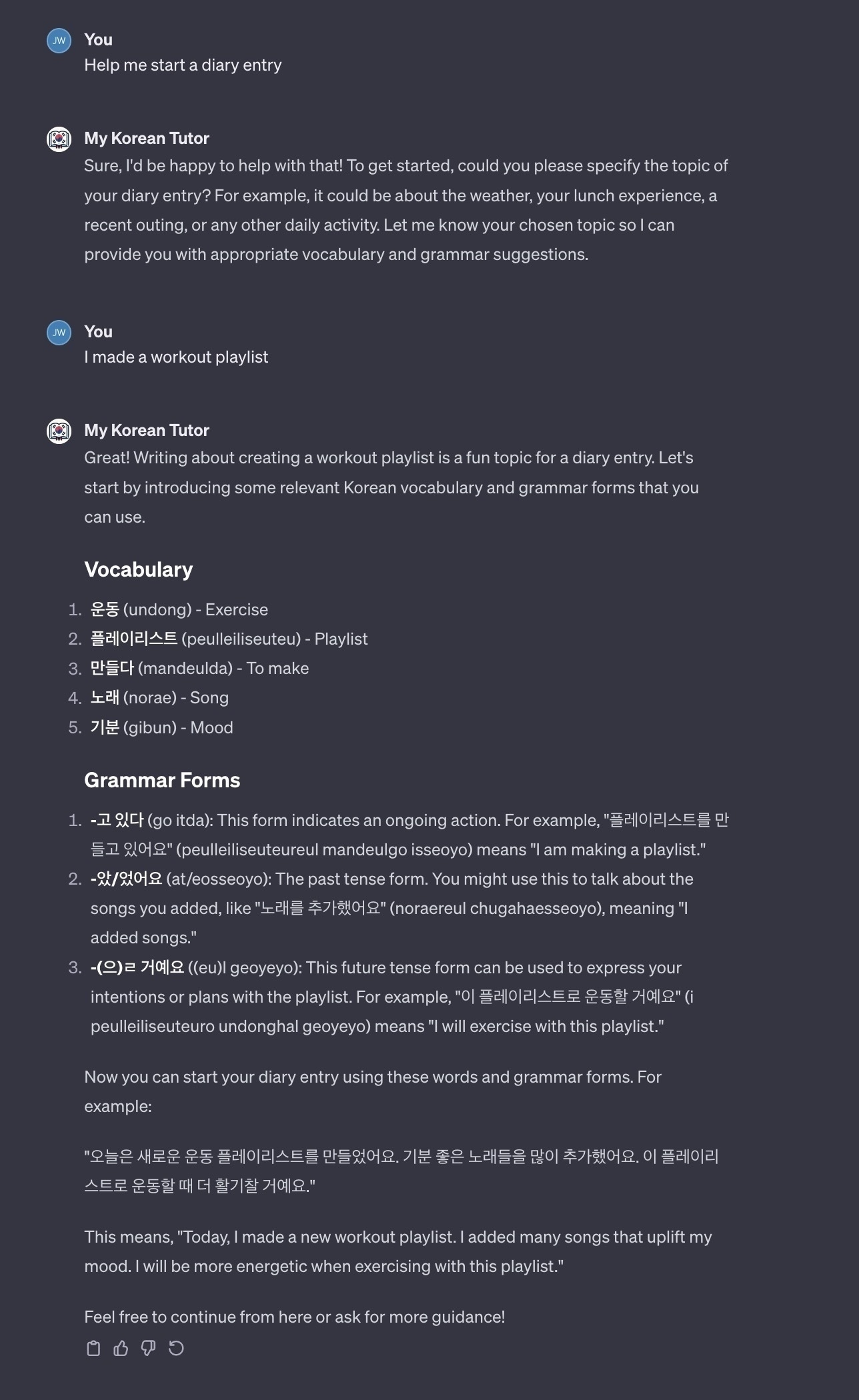

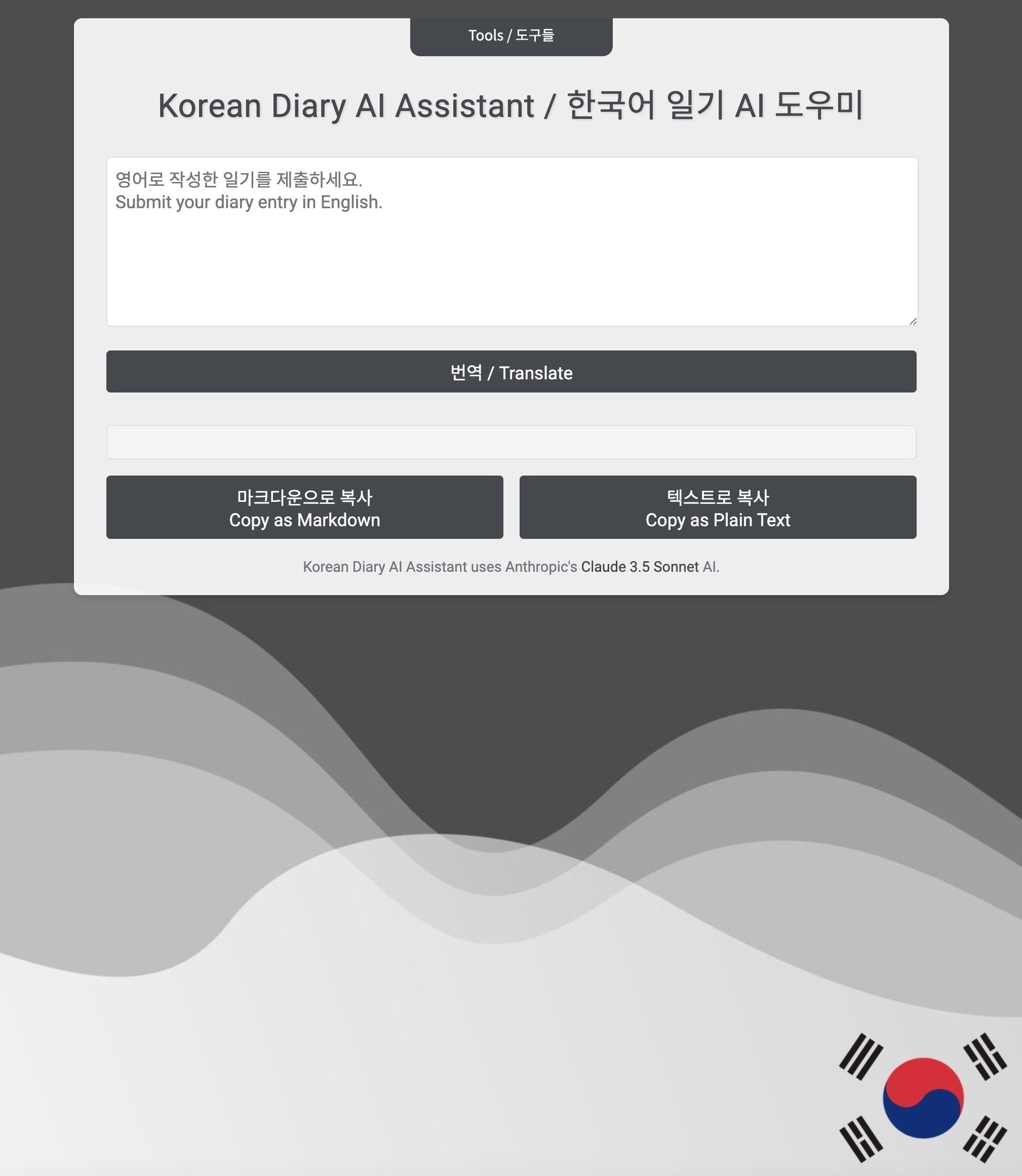

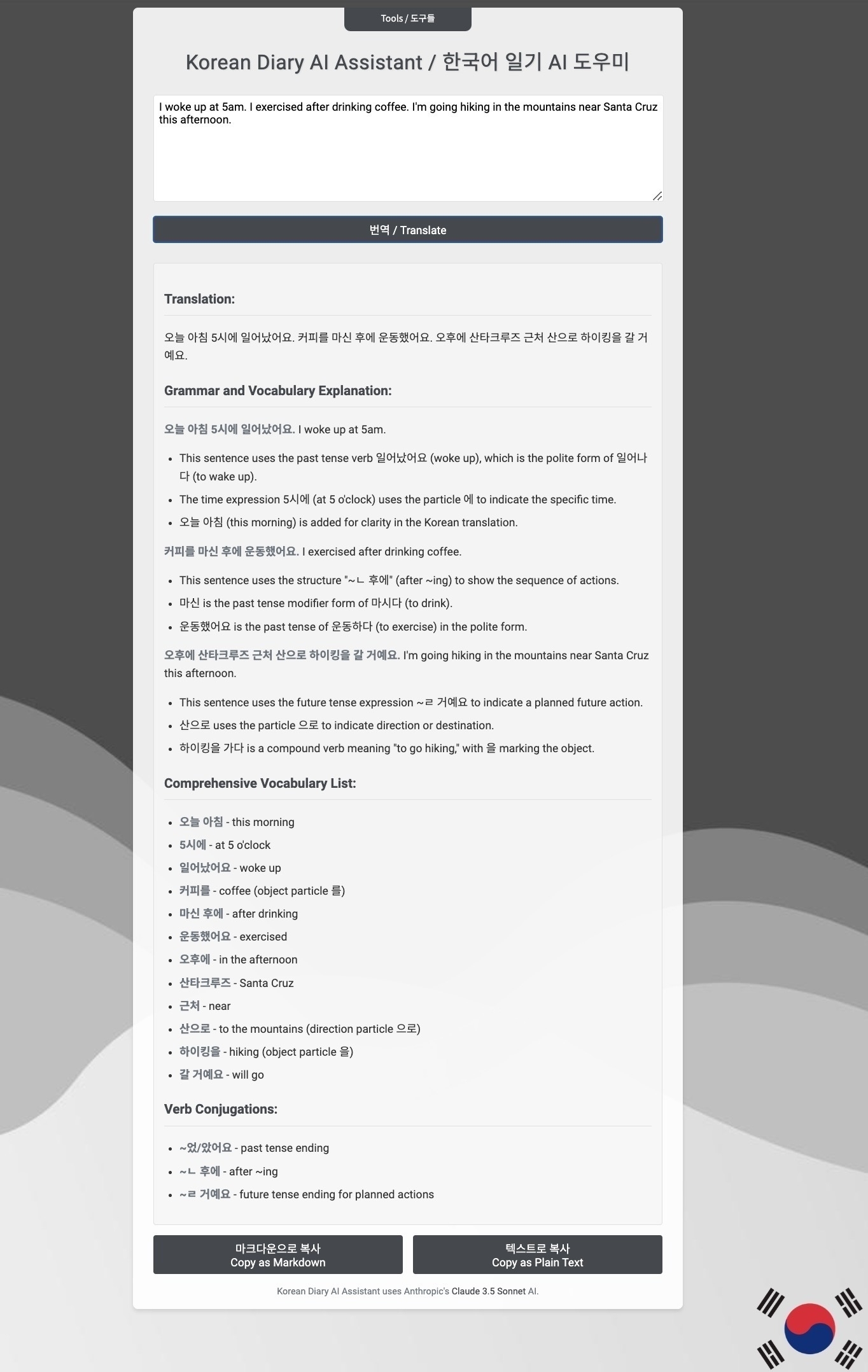

My first simple app is a Korean diary assistant that I built to help me write Korean sentences and break down the grammar and vocabulary in those sentences. Anthropic’s Claude 3.5 Sonnet handles the translation. It’s a fairly simple app and UI (there are some nice scrolling animation effects for the menu at the top, but that’s about it). The user can also export the translation and breakdowns either as markdown, formatted as shown on the screen, or as plain text.
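To make the export feature concrete, here is a minimal sketch of how one translated entry could be rendered as either markdown or plain text. I never read the generated code, so this is not the app's actual implementation; the `Breakdown` class, its fields, and both function names are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Breakdown:
    """Hypothetical container for one translated diary sentence."""
    english: str
    korean: str
    # (word, meaning) pairs; the structure is an assumption, not the app's schema
    vocabulary: list[tuple[str, str]] = field(default_factory=list)
    grammar: list[str] = field(default_factory=list)


def to_markdown(b: Breakdown) -> str:
    """Render the entry as markdown with headings and bullet lists."""
    lines = [
        "## Translation", "",
        f"**English:** {b.english}", "",
        f"**Korean:** {b.korean}", "",
        "## Vocabulary", "",
    ]
    lines += [f"- **{word}**: {meaning}" for word, meaning in b.vocabulary]
    lines += ["", "## Grammar", ""]
    lines += [f"- {note}" for note in b.grammar]
    return "\n".join(lines) + "\n"


def to_plain_text(b: Breakdown) -> str:
    """Render the same entry without any markdown syntax."""
    lines = [
        "TRANSLATION",
        f"English: {b.english}",
        f"Korean: {b.korean}",
        "",
        "VOCABULARY",
    ]
    lines += [f"  {word}: {meaning}" for word, meaning in b.vocabulary]
    lines += ["", "GRAMMAR"]
    lines += [f"  {note}" for note in b.grammar]
    return "\n".join(lines) + "\n"
```

Keeping the entry in one structure and rendering it twice means the two export formats cannot drift apart, which is probably the simplest way to support both.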

A few observations from the experience:

- I didn’t read or manually edit any of the Python, HTML, or CSS during development. By design, I simply conversed with the model in a constant cycle of build, test, debug, and repeat.

- A functional prototype with a basic system prompt can be up and running in minutes. Most of the few hours spent on this were in trying to get the model to understand my intent for the UI.

- I deployed this on PythonAnywhere. Deploying a web app there requires a fair amount of platform-specific configuration, but Claude 3.5 Sonnet (which is also the model I used in Cursor itself) completely understood that context and could provide assistance there as well.

- The collaborative experience with the AI made the whole process feel like working with a developer who instantly translated my requirements into testable code. It didn’t always work on the first try; in fact, it often didn’t, but with precise feedback, it eventually got there.

- Understanding software development and having a lot of legacy knowledge (like working from the command line) is still important, and the process can break down quickly as the complexity of the codebase increases. However, these are early days, and it’s clear that the software development process faces fundamental changes.

Unfortunately, I can’t make this public due to the cost of the Anthropic API.