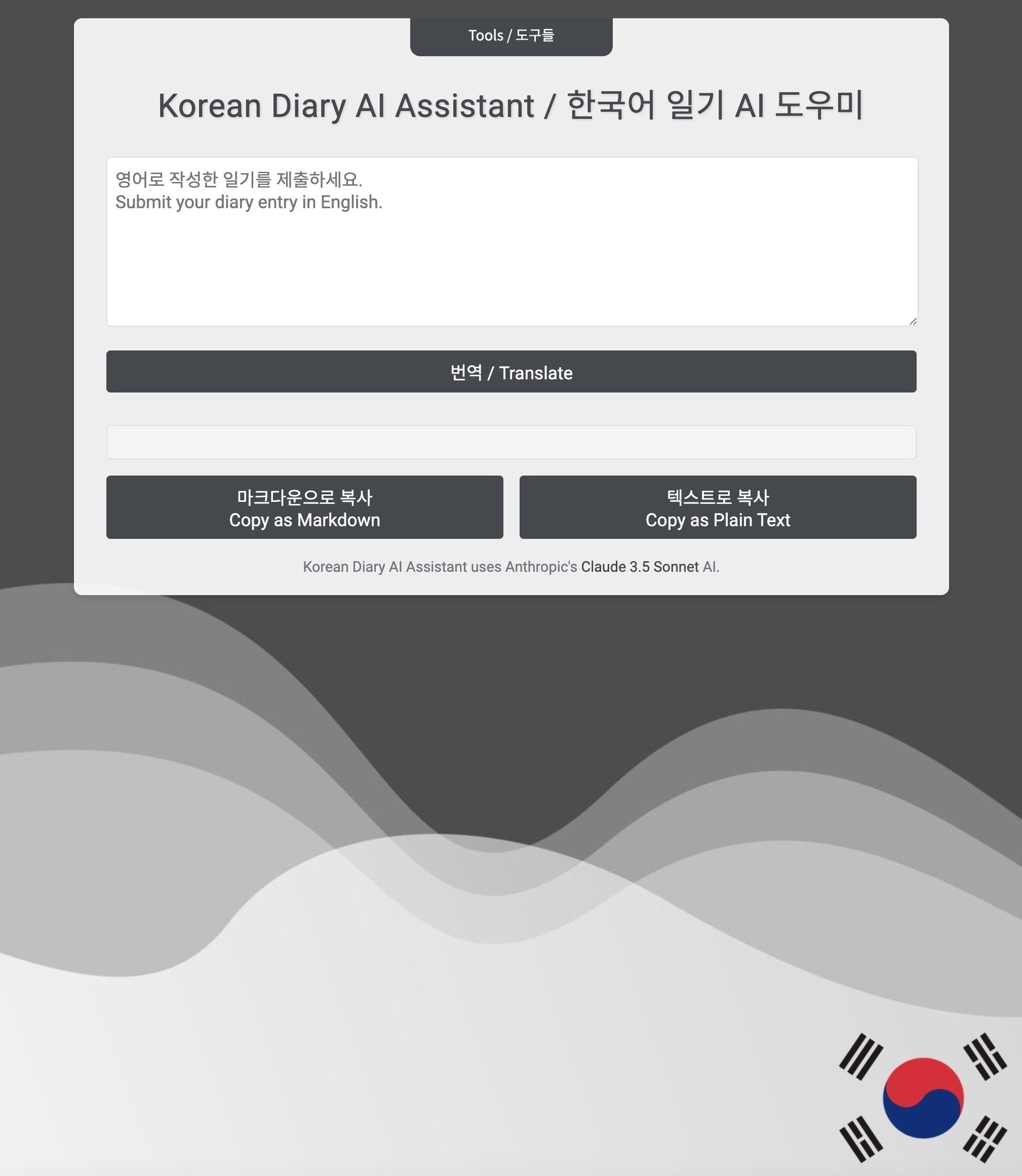

I recently received my Rabbit R1 AI companion and (as promised) wasted no time putting it through its paces. With its sleek design and promising features, I’ve been looking forward to exploring the limits of what it can do – and where it would inevitably fall short.

A common criticism of the Rabbit R1 AI companion is that it should have been developed as an app on smartphones, rather than standalone hardware. While this may seem like a more conventional approach, the Rabbit team has intentionally chosen to take a different path. They’re striving for a “post-app” future where human-machine interactions are rethought and reimagined, and they believe that custom-designed hardware is essential to achieving this vision. By separating their AI companion from the constraints of traditional mobile devices, the Rabbit team can focus on creating a more holistic computing experience that seamlessly integrates into daily life. While this approach is unlikely to succeed, I’m intrigued by their willingness to challenge conventional wisdom and explore new frontiers in human-computer interaction.

The Good

Let’s start with the positives. The design by Teenage Engineering is simply impeccable. The Rabbit R1 looks and feels like a premium product, with a build quality that’s top-notch. When you hold it in your hand, you can’t help but be impressed by the attention to detail. And none of the photos or videos do justice to the color – it’s much more vibrant in person.

The user interface is also well thought out and executed, even if it’s not without its bugs. The model response time is quite good, and I have no doubt that it will only improve as the team continues to refine their technology.

One of the standout features for me is the “Rabbit Hole” – a website that stores your interactions with the model, transcribed audio, and voice notes. It’s an incredibly useful feature that offers a level of transparency and control that I appreciate.

The price, $199 without a subscription, is also a standout. I’d pay more for a much more robust and polished version of a device like this, but this is a fair price for the R1 at this stage of its life. I knew before I ordered it that it would arrive as a work in progress with significant potential to be junk drawer filler, but the relatively low price and interesting tech made the risk worth taking.

The Not-So-Good

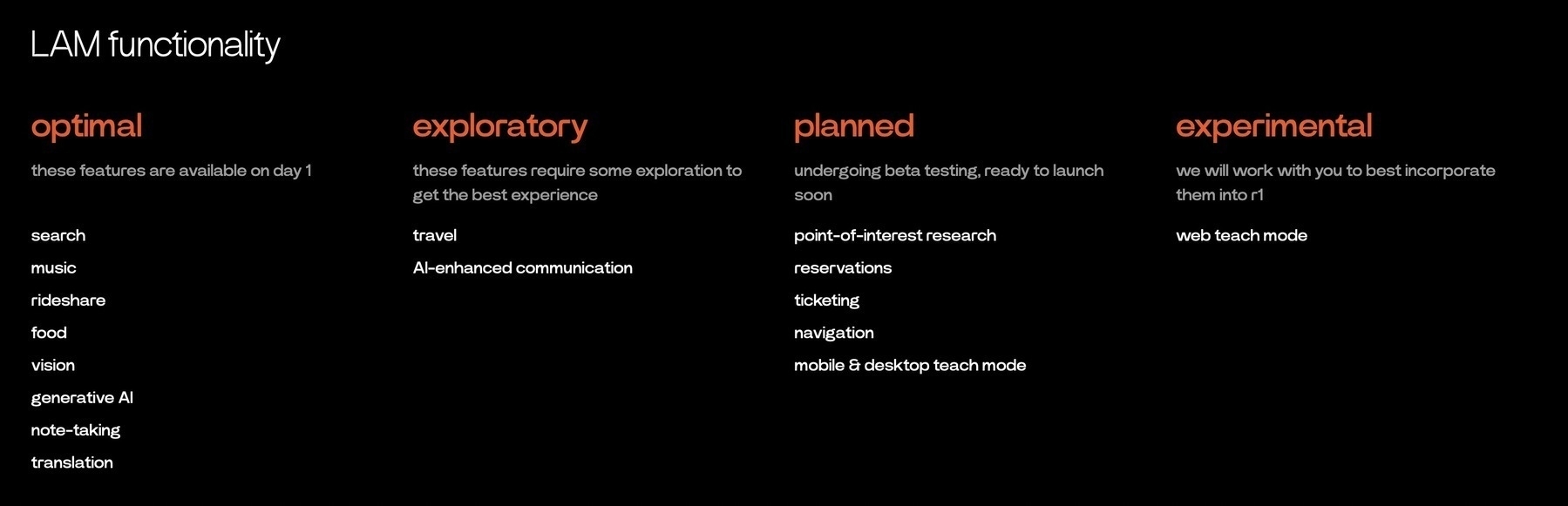

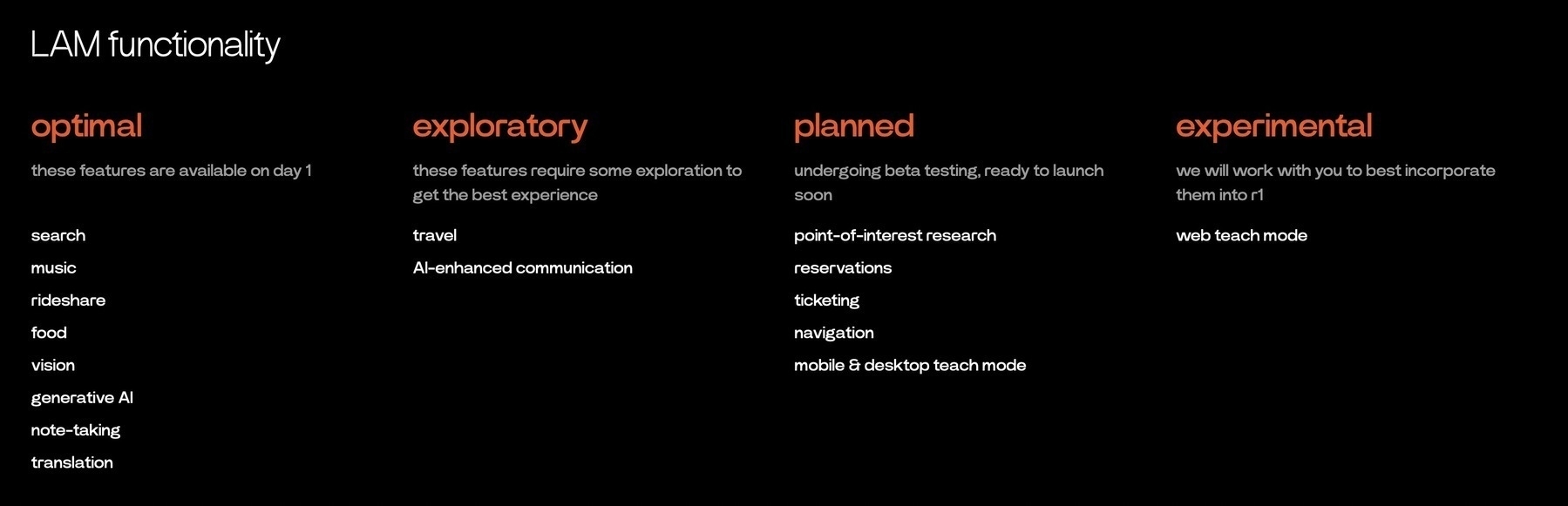

Of course, no category-launching product is perfect, and the Rabbit R1 is no exception. One of the biggest drawbacks is the lack of features – navigation, integration with popular apps and tools, alerts, alarms, reminders, email, and more are all on the roadmap but not yet available.

I also experienced some issues with hallucinations – instances where the model responded in a way that wasn’t entirely accurate or relevant. While this isn’t unique to the Rabbit R1, I did find it to be a bit too frequent for my taste.

Battery life is another area where the device falls short. As with many new tech devices, it is not great out of the box – but I’m confident that the team will address this issue in future updates (and they did, a day after I posted this).

Security and Privacy Concerns

As someone who values security and privacy, I have to express some concerns about the Rabbit R1. The risk is currently low: I’m not too concerned about it having my Spotify credentials, and I won’t record sensitive information. Still, I don’t feel comfortable giving it access to my critical accounts and information – at least not until the team can demonstrate even more transparency, auditing, and robustness in their end-to-end architecture. This isn’t just a challenge for the Rabbit team; it is something that will require a lot of thought from the entire industry as we migrate from apps to agents.

Customization and Voice Control

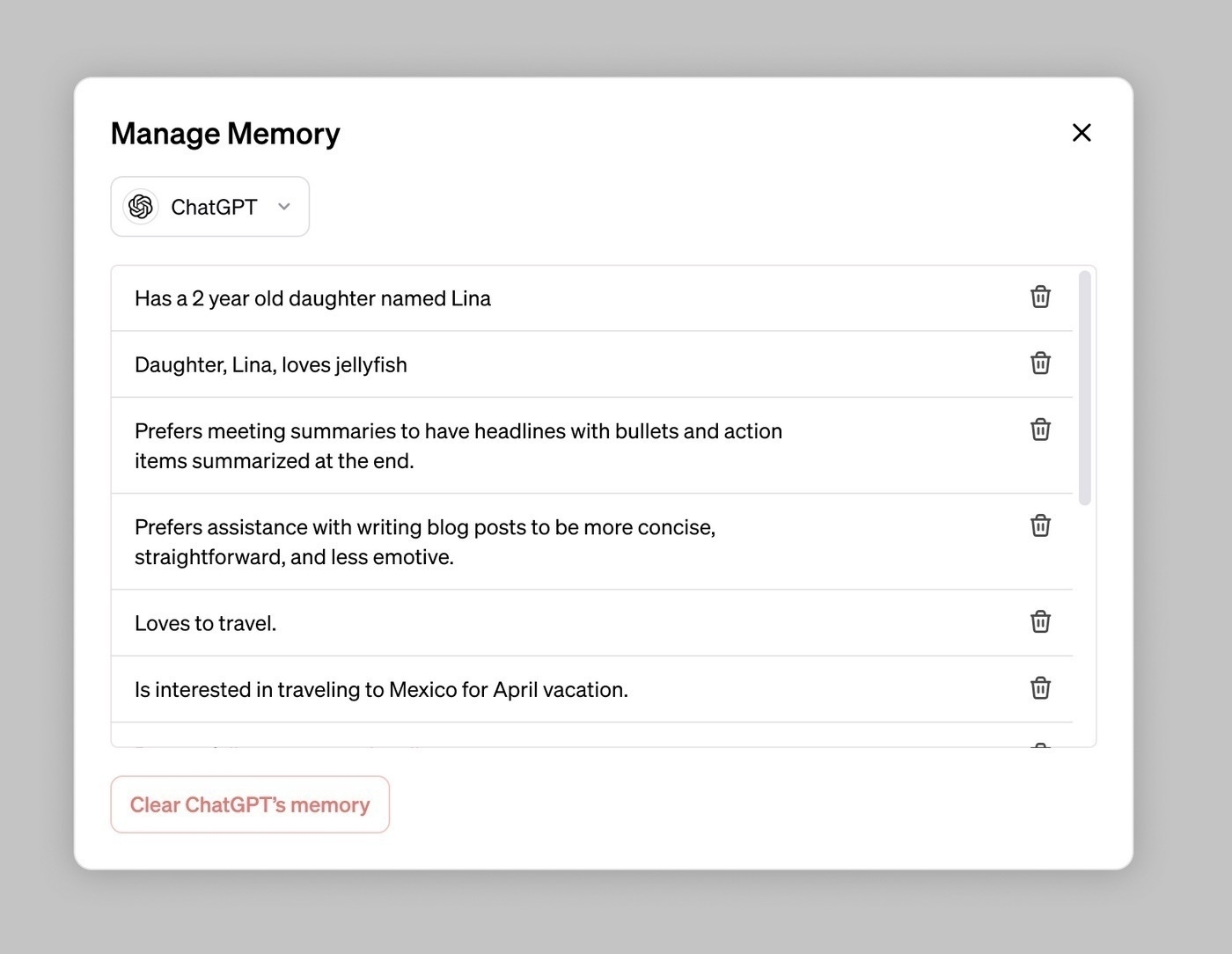

Finally, I want to touch on two additional areas where I think the device could be improved: LLM memory and customization, and voice control. While you can give the model voice instructions to customize its responses to you, these changes seem to be limited to the current session. That is not a huge deal for me at the moment, but customization that persists across sessions is something we will come to expect from our “AI companions.”

And speaking of voice control, I appreciate the inherent security of the push-to-talk button but would love to see an option for a wake word or hands-free use. It would be fantastic to be able to plug this device into a docking station at my desk and use it as a dictation tool or virtual assistant without having to constantly reach for it. I generally want to do as much as I can with this device via hands-free voice.

Conclusion

In conclusion, the Rabbit R1 AI companion is far from fully polished – but that’s to be expected from an emerging technology. The team is actively responding to user feedback on social media and rolling out frequent fixes and updates, which bodes well for its future development.

While there are certainly areas where the device falls short, I believe that it has some near-term potential. If you’re an early adopter who understands what to expect from this technology, then the Rabbit R1 might be worth considering. Just remember to temper your expectations and be patient – this is a product that’s still finding its footing.

TLDR: The Rabbit R1 AI companion is a promising but imperfect device that shows potential. While it has some drawbacks, I believe that it will continue to evolve and improve with time. If you’re willing to take the leap and join the early adopter crowd, then this might be worth considering – just don’t forget to keep your expectations in check. It is immature, but it knows what it wants to be when it grows up – time will tell if it gets there.

This review was mostly written by Meta’s Llama 3 from my robust notes on the R1.