Devin just took our jobs:

Figure just dropped a jaw-dropping progress update:

The thread linked above has a lot of interesting tech details but make sure to watch the demo video. A year ago I was telling people that these models would allow us to make 50+ years of progress in humanoid robotics in the next decade but it is increasingly looking like we’ll achieve that in two or three years. And if you think progress is head-spinning now (and it is) just wait until there are millions of these moving about the real world, learning every second, and benefiting from their collective experience.

Robotics and embodied AI just got a huge shot of energy (and capital) with Figure’s latest funding round:

- Investors include Microsoft, the OpenAI Startup Fund, Nvidia, the Amazon Industrial Innovation Fund and Jeff Bezos (through Bezos Expeditions).

- Others include Parkway Venture Capital, Intel Capital, Align Ventures and ARK Invest.

- The $675 million Series B funding round “will accelerate Figure’s timeline for humanoid commercial deployment,” the company said in a release.

The intrigue: Figure and OpenAI will also collaborate to develop next-generation AI models for humanoid robots.

- This will combine “OpenAI’s research with Figure’s deep understanding of robotics hardware and software,” the companies said.

- The partnership “aims to help accelerate Figure’s commercial timeline by enhancing the capabilities of humanoid robots to process and reason from language.”

Read the full story at Axios.

The Slaves, one of my favorite bands ever, seemed to be derailed by the pandemic and some personal tragedies but they have resurfaced as Soft Play and that makes me really, really happy.

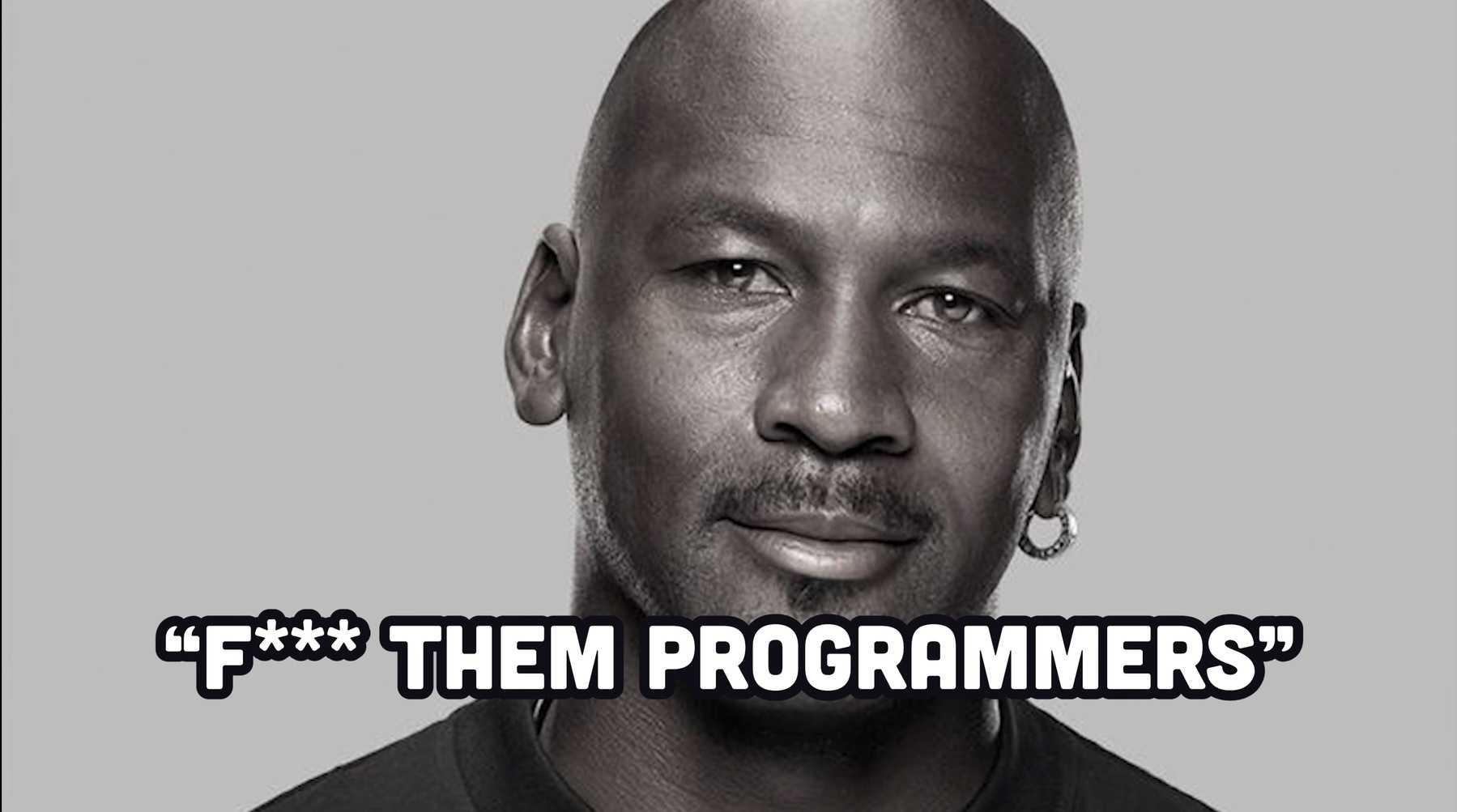

OpenAI is starting to add some basic memory capabilities to ChatGPT. Of course, AI won’t be able to act as your personal assistant or primary computing interface, or serve us in any kind of ongoing relationship, without some level of persistence - and more likely near total persistence by the time we approach maturity with some of these concepts. The privacy and security challenges will dwarf the many still unresolved privacy and security challenges that we struggle with today. It will all get even more challenging as traditional interfaces fall away in favor of an AI-first approach. This will happen faster than people think.

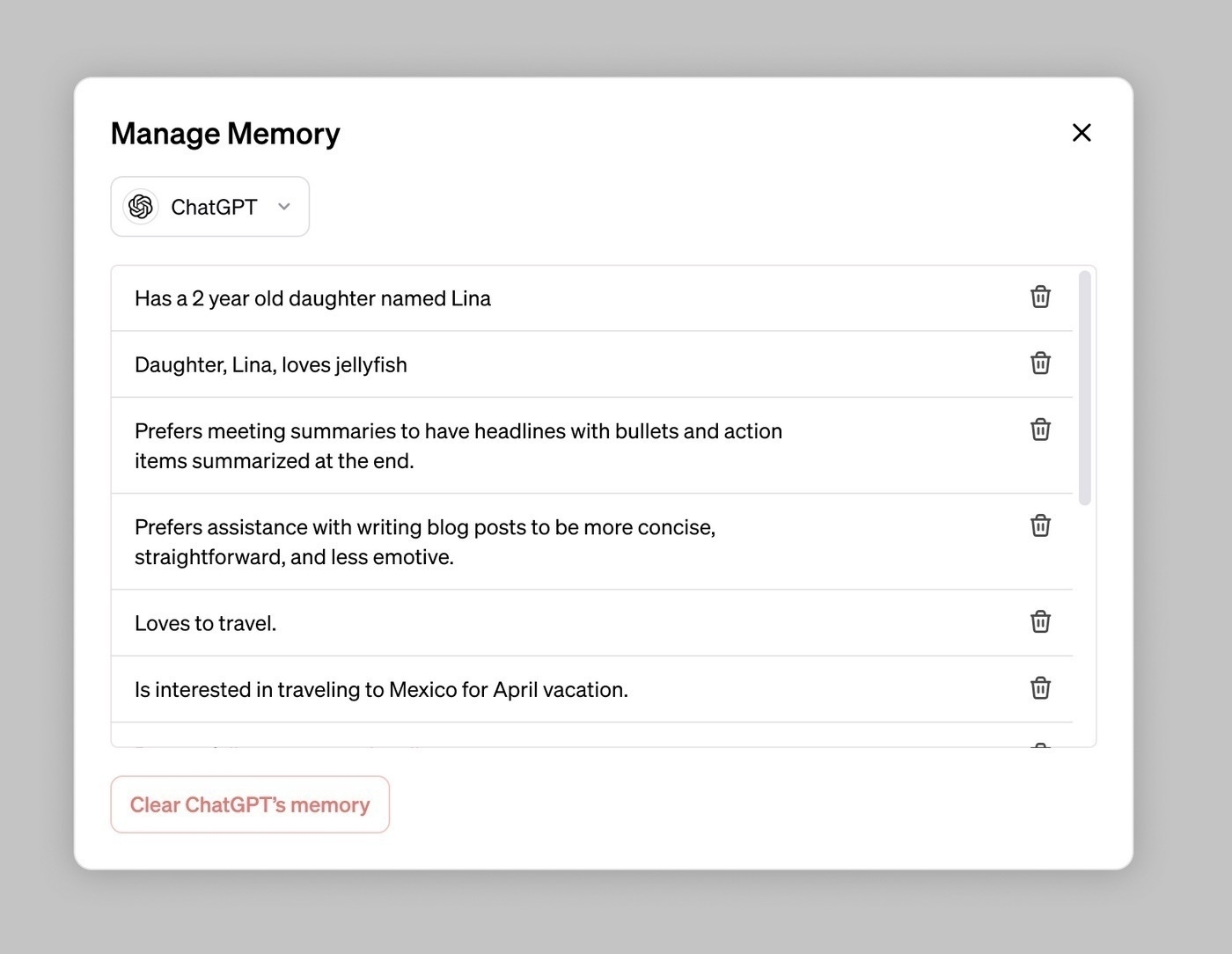

Another AI device worth tracking - Brilliant Labs' Frame glasses appear to do exactly what you’d expect this generation of AI glasses to do. This will definitely be a successful form factor for AI delivery but I find the novel approaches seen in the Rabbit R1 and Humane devices more interesting at the moment. I don’t expect to see a single winner in the AI device space. We will, quite soon, just be serving up AI access and functions through virtually everything we make.

I haven’t spent much time on Bluesky, or any social platform to be honest, but I did get around to playing with feeds there and built out a few if you’re interested:

Russia and NATO

Tracking discussions about NATO that include mentions of Russia, the Kremlin, or Putin

Intelligence

Tracking mentions of intelligence related keywords and a few agency names

Disinformation

Tracking mentions of disinformation, information operations, influence operations, deepfakes and other related terms

AI Ecosystem

Tracks mentions of major AI companies and products

New Space

Tracking mentions of dozens of “New Space” industry companies including Rocket Lab, SpaceX, Relativity, Firefly and others. SpaceX mentions are omitted because they would overwhelm content referencing lesser known companies.

Rocket Lab

Tracking mentions of Rocket Lab, its Electron and Neutron rockets, and its founder Peter Beck

Humanoid Robotics

Tracking mentions of humanoid robots and several companies leading in the space including Figure, Tesla, Apptronik, Sanctuary, Agility and others

Rabbit R1

Mentions of the Rabbit R1 AI device.

You can follow me there at blogsofwar.bsky.social

Deck.blue is a really solid shot at a Tweetdeck clone by developer Gildásio Filho. It’s definitely worth checking out, especially now that Bluesky registrations are open.

It took me forever but I finally got around to picking up a Flipper Zero. I have an ESP32 GPS and CC1101 Board on the way as well - for educational purposes of course. I’ve managed to get the Flipper set up with Flipper Xtreme firmware and play with it a bit. So far, I haven’t been disappointed. The amount of depth in this device, especially when enhanced with custom firmware, is insane. It can open up a near limitless number of rabbit holes for you to go down if you can find the time.

If you’re interested, Exploiting the Tangible: Hands-On Pentesting Techniques with Flipper Zero is a pretty good overview of its capabilities (and responsible use).

I’ve been tracking humanoid robotics startup Figure for a while but they are just now, thanks to a string of significant progress updates, starting to really grab people’s attention. This interview with CEO Brett Adcock is the most revealing update that I’ve seen to date.

AI and robotics are enablers and drivers for each other’s development in a very profound way. I think we’ll see more progress in the next 3-5 years than we have seen in the previous couple of decades and the capability/deployment curves will just rise exponentially from there.

This set by DJ Oneloop out of Nagaoka city, Niigata, Japan is perfection.

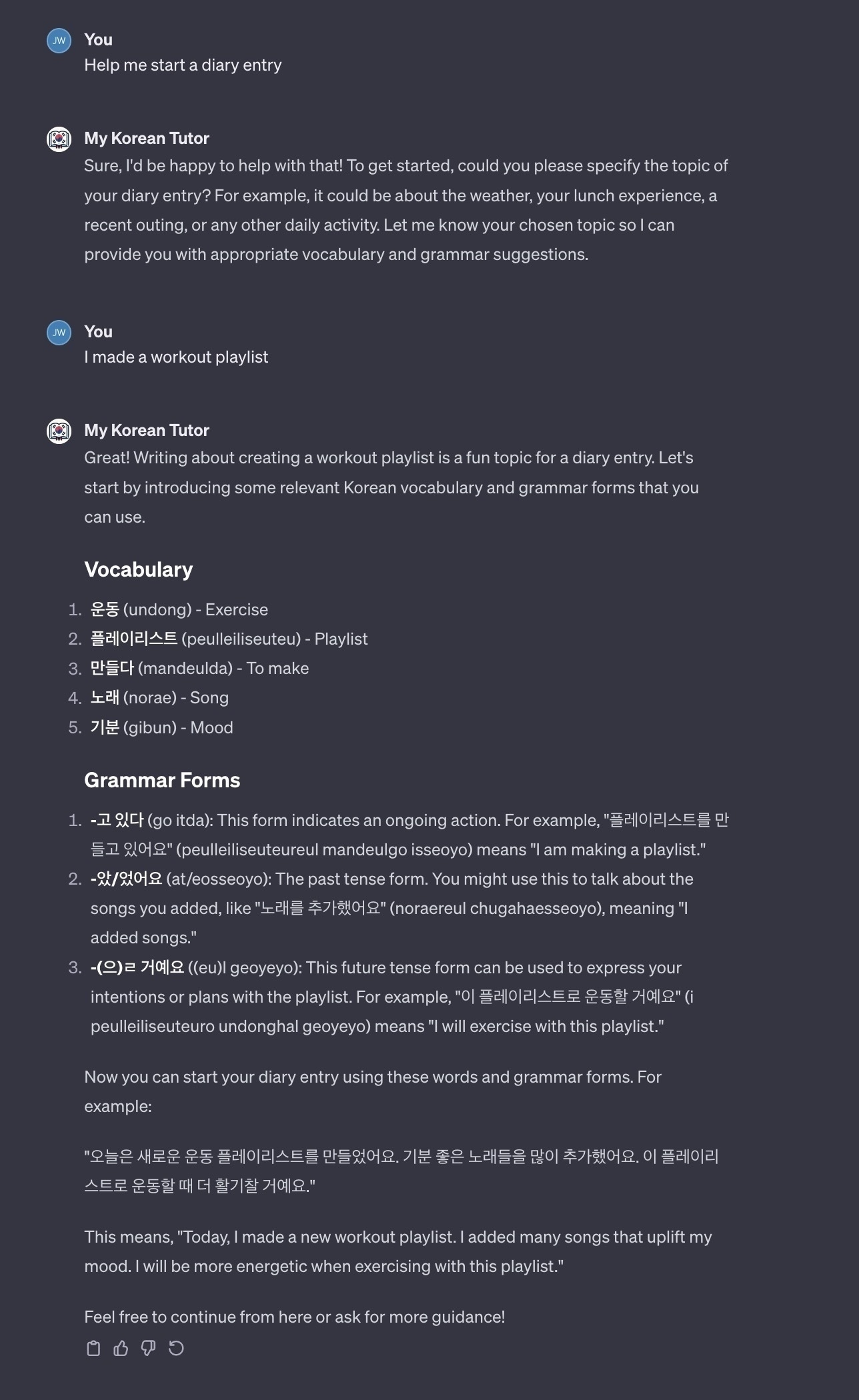

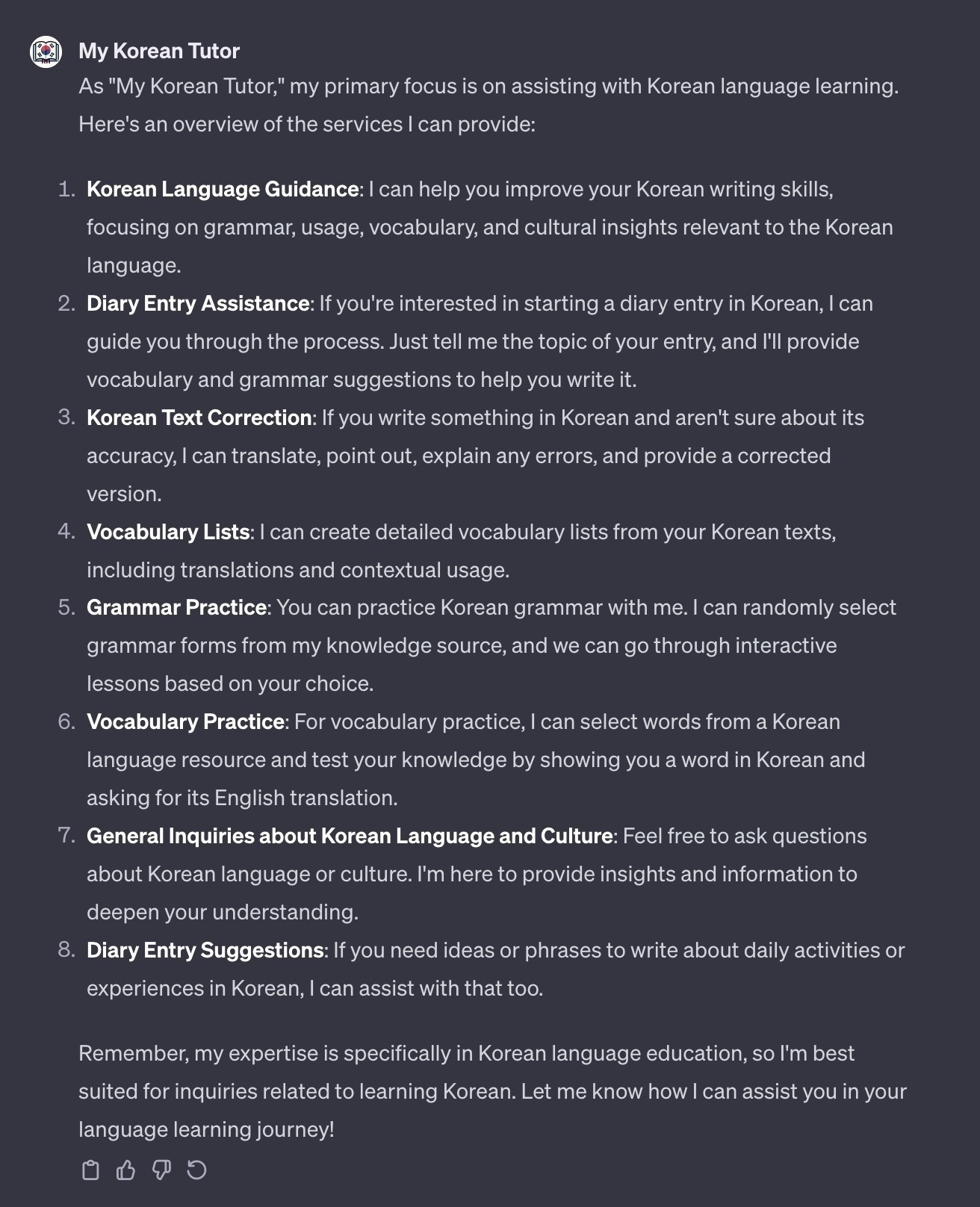

I’ve been working with LLMs for language learning over the past year and have found them extraordinarily useful. The moment OpenAI introduced custom GPTs I immediately rushed to create My Korean Tutor to address some specific challenges for Korean language learners.

It does a lot, but my primary goal in creating it was to make the process of daily journaling in Korean a little bit easier for learners who are still struggling to acquire vocabulary and basic grammar. My Korean Tutor helps reduce the friction by letting the user propose a topic and then giving them some basic vocabulary, grammar, and examples that they can use to get started.

As for the other features, some of them are visible via action buttons but if you ask it “What can you do?” it will reveal even more features:

If you’ve seen the Rabbit r1 but found it confusing this short Rough Guide to the Rabbit r1 might help:

Basically, it’s like a very smart 10 year child with perfect recall who does exactly what you tell it to. It can watch and mimic what you do on a computer, sometimes with uncanny accuracy. Whether you’re editing videos and could use an “auto mask” assistant, or you’re always booking trips online and get tired of the details, you let the Rabbit watch what you do a few times, then, it just…does it for you.

Technically, a Rabbit is an agent, a “software entity capable of performing tasks on its own.” Those of us with a love of sci-fi will remember the hotel agent in the book Altered Carbon; we ain’t there yet, but we’re getting closer.

Of course we’ll have to see how it actually lives up to that promise once units land in users’ hands in April/May. I should be receiving one then. I’ll share my thoughts soon after. FWIW I don’t expect it to be perfect and it doesn’t have to be perfect to be a success. The real question is: does this human-machine interface approach and its software layer show promise? Is there enough of a foundation there to build on or is it destined for the Museum of Failure? Their pricing strategy, keeping it cheap and subscription free, shows that they understand that there’s both an attraction to, and presumption of failure for, a device like this. I think we’ll know if they can clear that hurdle soon after it drops.

This is the Rabbit R1, an AI-first device with a novel OS and form factor that is aiming to be something more than a phone but not quite a complete computer replacement. It’s shooting for that holy grail slot of AI companion.

We’re destined to soon start filling junk drawers with several generations of these AI devices before someone gets it right, and this one will almost certainly end up there as well. Still, there are some interesting ideas and design choices at work here, and the price ($199 - no subscription) is right, so of course I ordered one immediately.

You can see a demo here.

If you didn’t watch the videos Google dropped with its Gemini announcement yesterday I highly recommend carving out a half hour or so of your time to do so. They’re excellent demonstrations of the power of multimodal AI models and just a hint of what is about to explode into every aspect of your personal and professional lives - no matter what you do and who you are. Get ready for it.

The announcement post and associated technical report are super interesting - if you’re into that sort of thing. 2024 is going to be wild.

Committee chair Lord Lisvane and committee member Lord Clement-Jones discuss the recent findings of the House of Lords Artificial Intelligence in Weapon Systems Committee in this video:

Firing up the pressure cooker and kicking off chicken soup season.

The recipe (for an 8QT pressure cooker) since someone asked:

10 cups chicken broth/stock or whatever combination of the two you like

4 boneless chicken breasts

2 Serrano peppers

2 limes (1 squeezed in for cooking - 1 after)

Small fingerling potatoes

Diced roma tomato

Garlic paste

Ginger paste

Salt / Pepper

Bay leaves

Thyme

Basil

Carrots

Diced onion

White mushrooms

Pressure cook everything on high for 15 minutes, remove and shred the chicken, return it to the soup and pressure cook for another 5 minutes. If you’re not familiar with cooking with a pressure cooker this video might help.

It’s hard to believe that a film from 1970 could get so much about AI so right, but ‘Colossus: The Forbin Project’ does exactly that. I won’t reveal any spoilers, but its exploration of issues related to alignment and emergent behaviors was prescient. It’s also a really entertaining film, albeit a bit dark.

I fed the plot to ChatGPT and asked it to create the image above. It seemed like the appropriate thing to do. You can stream Colossus: The Forbin Project for free at the Internet Archive.

A team of researchers from Rutgers and the University of Michigan have developed WarAgent:

“…the first LLM-based Multi-Agent System (MAS) that simulates historical events. This simulation seeks to capture the complex web of factors influencing diplomatic interactions throughout history”

They used it to simulate events related to both world wars and published their findings in War and Peace (WarAgent): Large Language Model-based Multi-Agent Simulation of World Wars. This is really exciting stuff.

Here’s the abstract:

Can we avoid wars at the crossroads of history? This question has been pursued by individuals, scholars, policymakers, and organizations throughout human history. In this research, we attempt to answer the question based on the recent advances of Artificial Intelligence (AI) and Large Language Models (LLMs). We propose WarAgent, an LLM-powered multi-agent AI system, to simulate the participating countries, their decisions, and the consequences, in historical international conflicts, including the World War I (WWI), the World War II (WWII), and the Warring States Period (WSP) in Ancient China. By evaluating the simulation effectiveness, we examine the advancements and limitations of cutting-edge AI systems’ abilities in studying complex collective human behaviors such as international conflicts under diverse settings. In these simulations, the emergent interactions among agents also offer a novel perspective for examining the triggers and conditions that lead to war. Our findings offer data-driven and AI-augmented insights that can redefine how we approach conflict resolution and peacekeeping strategies. The implications stretch beyond historical analysis, offering a blueprint for using AI to understand human history and possibly prevent future international conflicts. Code and data are available at github.com/agiresear…

H/T Ethan Mollick

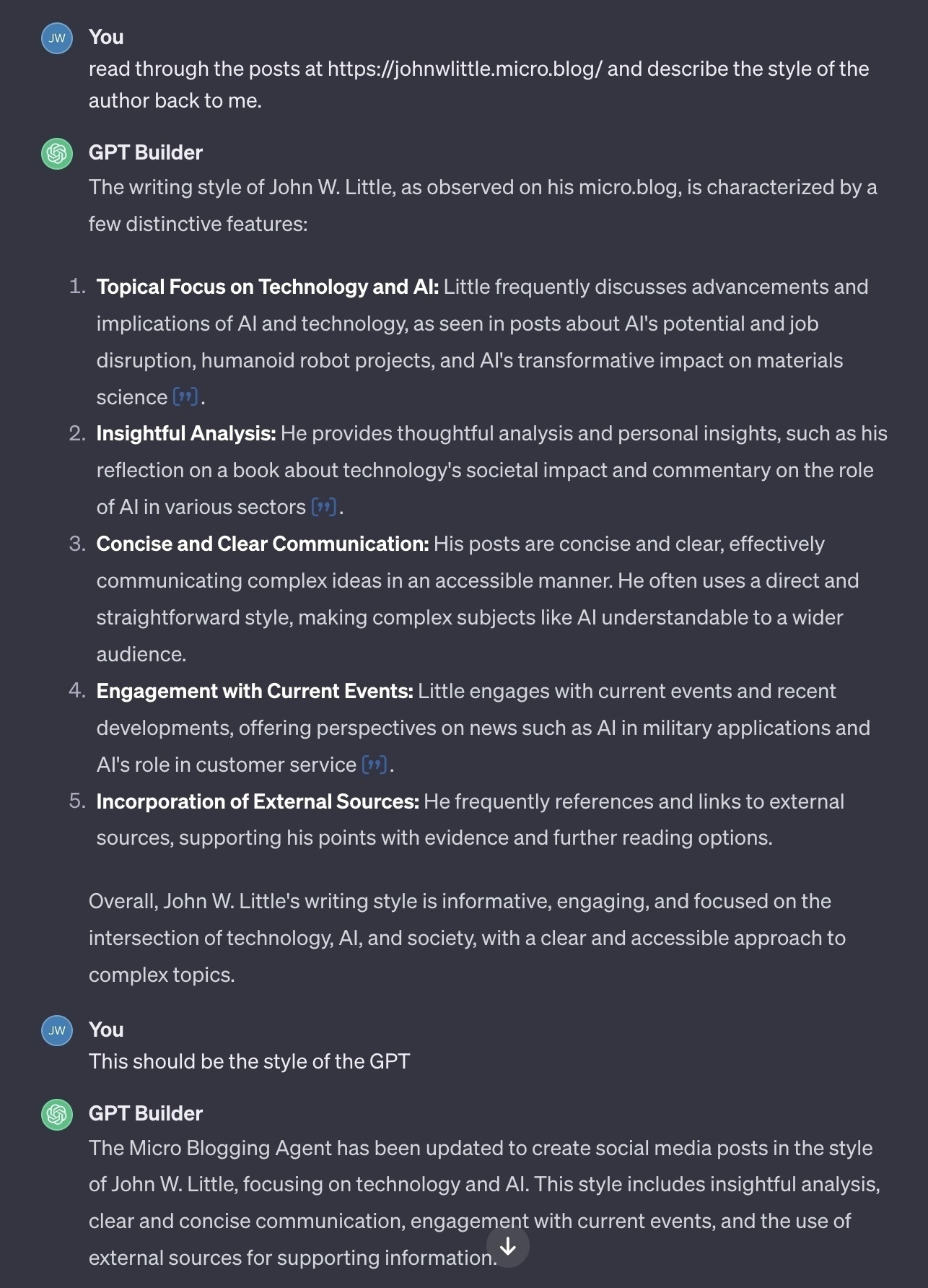

As mentioned earlier I have been constantly creating custom GPTs (both public and private) as technology demonstrators or to offload or minimize repetitive tasks. I just finished creating one to help me stay on top of some of the topics I post about here. This screenshot illustrates how simple this process can be.

It’s important to note that I’m not using this to automate blogging or social media posts (although that’s certainly possible). It’s just a handy tool that helps me stay on top of a number of rapidly evolving topics - a research assistant.

This is an excellent deep dive on AI’s potential and the job disruption that will follow - and why you should be adapting in response now.

Kicking off the weekend with a vinyl session from Spain’s Dj Fonki Cheff 🎵